When I decided to properly start using the Fediverse via my own Mastodon server, I knew it was probably inevitable that I would end up writing my own server - and, well, here we are!

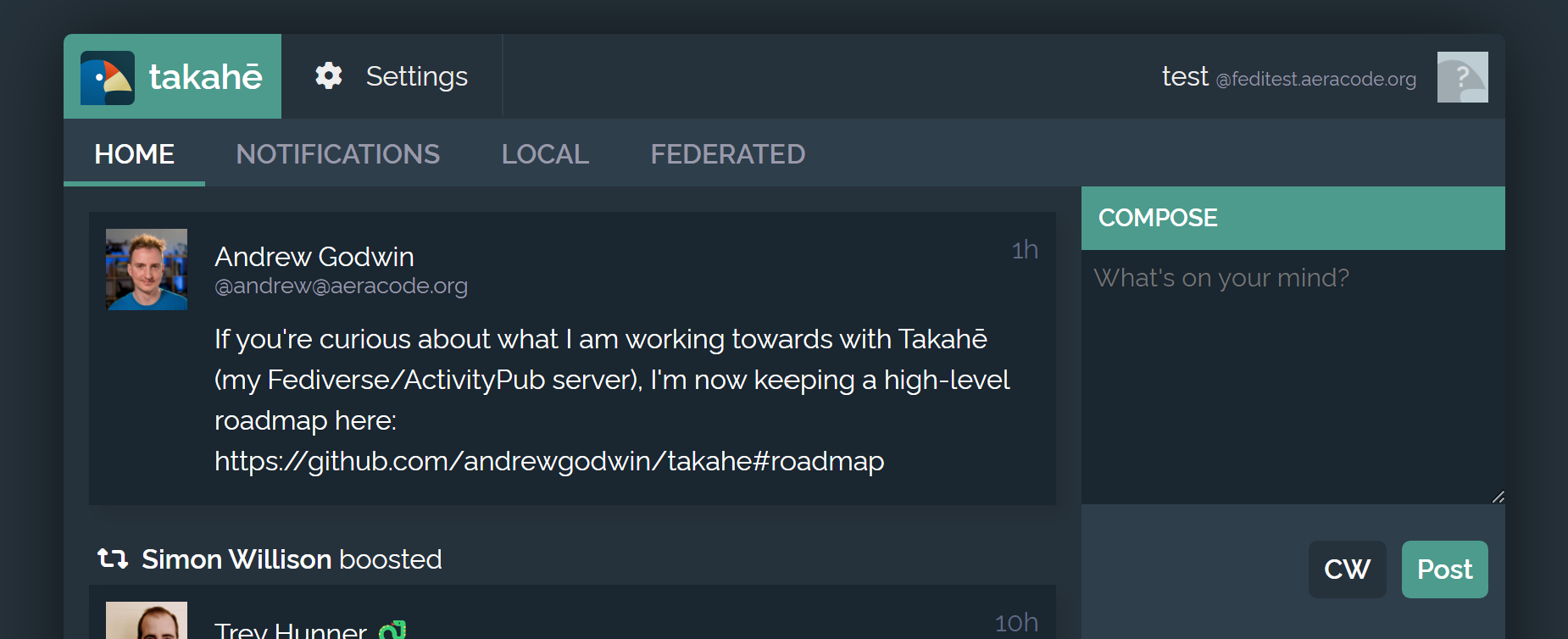

My new server is called Takahē, and it's built in Django, specifically on top of Python's async library ecosystem - I'll explain more about why that matters later.

Going into this project, I had a couple of high-level goals I wanted to achieve that I think are lacking in other servers I've tried:

- The ability to have multiple domains on a single server

- Runs easily on "serverless" hosting (no background processes)

I also want some other key things:

- A simplistic, low-JS user interface that is easy to maintain

- Mastodon client API compatibility so other UIs are available

- A worker system that doesn't get bogged down under the load of ActivityPub

- Reconciliation-loop handling of all state progression

Let's dig into some of these a bit more!

Multiple Domains Per Server

Most ActivityPub servers, like Mastodon, can be configured so that the accounts have a different domain to the one the server is on - like my server, which serves the aeracode.org domain but actually runs at fedi.aeracode.org.

What they don't do, however, is let you have multiple domains per server. If you want to use your personal domain as part of your Fediverse "handle", you're forced to set up a whole server just for it - I can't have Mastodon handle both @andrew@aeracode.org and @tales@fromtheinfra.com on the same server, for example.

Initially, I thought this might be a restriction in the ActivityPub and WebFinger protocols, but while the way domains are resolved is strange, you can effectively serve multiple domains from one server as long as each domain has a unique "service domain" that it is served on. The service domain can be the same as the "display domain" used in handles, but otherwise it must not be shared with any other domain. I'll dig into this more in future; for now, just know that it's possible, if difficult.
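To make the display-domain/service-domain split concrete, here's a minimal sketch of the bookkeeping involved. It assumes each display domain forwards its `/.well-known/webfinger` requests to the server, and that the server tells domains apart by the `Host` header of incoming requests. The `Domain` class, the index functions and the actor URL layout are all hypothetical, not Takahē's actual code; only the WebFinger response shape (`subject`, `links`, `rel: self`, `application/activity+json`) follows what Fediverse servers commonly exchange.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Tuple


@dataclass(frozen=True)
class Domain:
    display: str  # the domain in handles, e.g. @andrew@aeracode.org
    service: str  # the domain the server actually answers requests on


def build_indexes(domains: Iterable[Domain]) -> Tuple[Dict[str, Domain], Dict[str, Domain]]:
    """Index domains both ways, refusing to let two domains share a service domain."""
    by_display: Dict[str, Domain] = {}
    by_service: Dict[str, Domain] = {}
    for domain in domains:
        if domain.display in by_display:
            raise ValueError(f"duplicate display domain: {domain.display}")
        if domain.service in by_service:
            # A shared service domain would make incoming requests ambiguous
            raise ValueError(f"service domain already in use: {domain.service}")
        by_display[domain.display] = domain
        by_service[domain.service] = domain
    return by_display, by_service


# Hypothetical configuration: one server hosting two handle domains
BY_DISPLAY, BY_SERVICE = build_indexes([
    Domain(display="aeracode.org", service="fedi.aeracode.org"),
    Domain(display="fromtheinfra.com", service="fromtheinfra.com"),
])


def webfinger(resource: str) -> dict:
    """Answer a WebFinger query such as resource=acct:andrew@aeracode.org."""
    if not resource.startswith("acct:"):
        raise ValueError("only acct: resources are supported")
    username, _, display = resource[len("acct:"):].partition("@")
    domain = BY_DISPLAY.get(display)
    if domain is None:
        raise LookupError(f"not a domain we host: {display}")
    # The actor document lives on the service domain (this path layout is made up)
    actor_url = f"https://{domain.service}/@{username}@{domain.display}/"
    return {
        "subject": f"acct:{username}@{domain.display}",
        "links": [
            {"rel": "self", "type": "application/activity+json", "href": actor_url},
        ],
    }


def domain_for_host(host: str) -> Optional[Domain]:
    """Map the Host header of an incoming request back to exactly one domain."""
    return BY_SERVICE.get(host)
```

The uniqueness check is the important part: when a request for an actor URL arrives, the service domain in its `Host` header is the only thing telling the server which display domain it belongs to.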

Serverless-Friendly Architecture

I have run all my personal projects on "serverless" hosting for several years now - Google Cloud Run, mostly. Having software only spin up on request and not just sit around is great - not just for efficiency, but because it enforces a shared-nothing architecture on you that makes things easy to maintain.

ActivityPub, however, is a protocol with a lot of background messaging. Every post has to be delivered to lots of individual (or shared) inboxes, you have to look up Actor information and public keys, and more. Mastodon uses Sidekiq - a classic background job processor - to do this, but it needs a whole bunch of workers running at all times.

I instead want a design where all I need to deploy is a Docker image and a database, plus a scheduled task that calls a URL every X seconds - these are all primitives that are easily available on any modern serverless platform.

In theory you could try and do a lot of processing during the "inbox" requests, but you still need a way to send things to other inboxes, and to retry things.
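To give a feel for how much sending there is, here's a small sketch of working out where a single post has to go. It assumes each remote follower's actor document has been fetched already, and that a server may advertise a shared inbox under `endpoints.sharedInbox`, as ActivityPub allows. The `RemoteFollower` type and `delivery_targets` function are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class RemoteFollower:
    actor_uri: str
    inbox: str
    # Taken from the actor document's "endpoints": {"sharedInbox": ...}, if present
    shared_inbox: Optional[str] = None


def delivery_targets(followers: Iterable[RemoteFollower]) -> List[str]:
    """Return each inbox URL a public or followers-only post must be POSTed to, once."""
    targets = set()
    for follower in followers:
        # Prefer the server-wide shared inbox so one POST covers many followers
        targets.add(follower.shared_inbox or follower.inbox)
    return sorted(targets)
```

Even after collapsing shared inboxes, every target is a separate HTTP request to a server that might be slow or down, so each one needs to be tracked and retried independently.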

Of course, if you were to design a traditional worker system that handled a single job at a time per process, running it as scheduled HTTP requests would be inefficient. However, thanks to Python's async support, we can pack a few hundred tasks into that same space instead, making it more efficient!
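Here's a minimal sketch of that idea, assuming each scheduled request gets a fixed time budget and that the work is I/O-bound (so it mostly waits on the network). The `deliver` coroutine is a hypothetical stand-in for a real inbox POST, and `run_tick`, `concurrency` and `time_budget` are illustrative names, not Takahē's API.

```python
import asyncio
import time
from typing import Iterable, List, Optional


async def deliver(task_id: int) -> str:
    # Hypothetical stand-in for real I/O, such as POSTing an activity to a remote inbox
    await asyncio.sleep(0.01)
    return f"delivered {task_id}"


async def run_tick(task_ids: Iterable[int], concurrency: int = 200, time_budget: float = 5.0) -> List[str]:
    """Work through pending tasks concurrently inside one scheduled HTTP request."""
    semaphore = asyncio.Semaphore(concurrency)  # cap on tasks in flight at once
    deadline = time.monotonic() + time_budget  # stay well inside the request timeout

    async def run_one(task_id: int) -> Optional[str]:
        async with semaphore:
            if time.monotonic() >= deadline:
                return None  # out of time: leave this one for the next tick
            return await deliver(task_id)

    results = await asyncio.gather(*(run_one(t) for t in task_ids))
    return [r for r in results if r is not None]
```

The handler behind the scheduled URL would await `run_tick(...)` with whatever is pending; because nothing is kept between calls, a long-running worker could later call the same function in a loop without any changes to the data it works on.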

It's not going to compete with a true asynchronous task queue for throughput, but I suspect it will easily make it to the thousands-of-users range, and I've also left room so that I can write a traditional long-running worker that drops in and uses the same data storage.

Scaling

Now, it's worth stopping here for a bit to talk about something I'm specifically not going after - scalability.

If I wanted to build an amazing, scalable system, then I'd be architecting things differently, and probably using a proper queue rather than batching things in the background. (I'd probably also want a team to help me out, but I digress!)

While I do think that the eventual fate of the ActivityPub ecosystem (Fediverse) is a few, big instances where most users live, I'm choosing not to tackle the big-server problem right now, and instead focus on building a system that's reliable and easy to maintain.

Things that are small and easy to maintain are more fun than "big and hard to scale" anyway.

I have a reasonable idea of where my bottlenecks are - the worker system and an over-reliance on complex SQL queries for live logic - but these are also very solvable problems. If things ever get to the point where I need to solve them, hopefully it's because things are going well, and maybe I'll have some folks around me to help.

Low-JS Interfaces

While I have nothing but admiration for those engineers who can build and maintain complex single-page applications built out of a tower of JavaScript and build tools, I am a simple person who just likes HTML and CSS.

If I'm building a modern server, I'm going to give it a web UI (so I can add features easily without waiting for clients to catch up) and I'm going to give it an API (so people who are better at this than I am can build fancy clients).

That means I want to make the web UI in a way that I can maintain and change very easily - HTML, forms, links, and the occasional bit of JavaScript mixed in to show and hide elements on demand. HTMX offers an easy way to add a few non-full-refresh elements, too, so that's the plan.

I am also experimenting with Hyperscript as my replacement for jQuery as the "thing that toggles elements when buttons are pressed".

This style of server-rendered UI also happens to be what Django is very good at - there's nothing like being able to lean on its proven templating and forms libraries.
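As a rough illustration of the HTMX approach, one common pattern is for the same URL to return either a full page or just the changed fragment. HTMX adds an `HX-Request: true` header to the requests it makes, which gives the server a way to tell the two apart. This framework-free sketch uses hypothetical `render_post` and `timeline_response` helpers; in Django, the header check and templates would replace the string building.

```python
from html import escape
from typing import Dict, List


def render_post(post: dict) -> str:
    # Escape user content before putting it into HTML
    return f'<article class="post"><p>{escape(post["content"])}</p></article>'


def timeline_response(headers: Dict[str, str], posts: List[dict]) -> str:
    """Return just the timeline fragment to HTMX, or a full page to a normal request."""
    normalised = {name.lower(): value for name, value in headers.items()}
    fragment = "".join(render_post(p) for p in posts)
    if normalised.get("hx-request") == "true":
        return fragment  # HTMX swaps this into the existing page
    return f'<!doctype html><html><body><main id="timeline">{fragment}</main></body></html>'
```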

Reconciliation Loops

One of the architectures for distributed systems that I enjoy the most is the "reconciliation loop" - a central datastore with all of the state, and then a series of stateless worker processes that connect to it, find a thing to progress, progress it, and then loop over and start again.

Kubernetes is a famous example of this, and while I may have some issues with it occasionally, it is never with this part of the design. It's easy to understand, easy to program against, and you end up with a single point of failure and a limited number of communication links to keep working.

While ActivityPub's data model doesn't lend itself to the exact "make object types and watch them" model that Kubernetes enjoys, there's a similar approach: give each object type a state machine, define how to progress from each state to the next, and then feed that into a similar stateless-worker system - so that's what I've done!
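Here's a minimal sketch of what "a state machine per object type" could look like, assuming each state has one async handler that reports whether it managed to progress the object. The `StateMachine` class, the follow states and both handlers are hypothetical; the handlers stand in for real network code.

```python
import asyncio
from typing import Awaitable, Callable, Dict, Set, Tuple

# A handler tries to do the work for one state and reports whether it succeeded
Handler = Callable[[dict], Awaitable[bool]]


class StateMachine:
    """Maps each non-terminal state to (handler, state to move to on success)."""

    def __init__(self, transitions: Dict[str, Tuple[Handler, str]], terminal: Set[str]):
        self.transitions = transitions
        self.terminal = terminal

    async def attempt(self, obj: dict) -> bool:
        """Try to move obj up one notch; leave it where it is if the handler fails."""
        state = obj["state"]
        if state in self.terminal:
            return False
        handler, next_state = self.transitions[state]
        if await handler(obj):
            obj["state"] = next_state
            return True
        return False  # a later pass of the loop will try again


# Hypothetical follow lifecycle
async def send_follow_request(follow: dict) -> bool:
    follow["follow_sent"] = True  # would POST a Follow activity to the remote inbox
    return True


async def check_accepted(follow: dict) -> bool:
    return follow.get("accept_received", False)  # set when an Accept arrives


FOLLOW_MACHINE = StateMachine(
    transitions={
        "unrequested": (send_follow_request, "requested"),
        "requested": (check_accepted, "accepted"),
    },
    terminal={"accepted"},
)

if __name__ == "__main__":
    follow = {"state": "unrequested"}
    asyncio.run(FOLLOW_MACHINE.attempt(follow))
    print(follow["state"])  # requested
```

A failed handler is not an error here, just "not yet": the object keeps its state, and the next pass of the loop tries it again.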

I was tempted to try and make this work more like the Kubernetes model, but AP does heavily assume single instances of things with URIs.

There's no queue of tasks anywhere, just a database design that lets a series of stateless (and short-lived) workers spin up, work out what objects can have progression attempted, run their progression code, and move their state up one notch if they succeed.

What's Next?

I'll be writing detailed blog posts about each of these elements and more over the coming weeks - I have learned a lot about ActivityPub and JSON-LD, and want to add to the corpus of information out there about them (the more info the better!), and I'm also particularly proud of my state-machine-worker system that's all async at the core.

As for Takahē itself, I have a roadmap outlined that gives you an idea of where I've got to and what I'm trying to aim for - at the time of writing, it's taken 9 days of part-time work to get to a server that hosts identities, receives posts, sends them, handles following and more, which I'm quite pleased with. It's also been very fun to work on a web app that is entirely self-defined and on my own - while I do love leading teams in my professional life, sometimes it's nice to get back to my roots, writing web applications and building dodgy, programmer-designed UIs while listening to electronic music.

My hope is to get a usable alpha/beta out before the end of the year and start having a few people run it and test it out on non-critical accounts and domains. I don't expect anything truly production-quality super quickly, but then again I already have multiple domains and serverless-friendly workers both functional, and there are definitely some people out there interested in those!