So, after a few weeks of development, I'm happy enough with the state of Takahē to issue its first official release - which I've chosen to number 0.3.0, because version numbers are made up and I can start where I want.

We're only releasing Docker images right now in order to try and keep the support burden down (it removes having to worry about people's OS versions and library environments), so you can find it on Docker Hub.

This first release is still quite early - I certainly wouldn't try to run a community on it yet, as the moderation features are nonexistent - but it does function, and it's better to get into the release cadence sooner rather than later.

I'm keeping a proper features document with a list of what's supported so far, but I think this first release has most of the basics - posting, boosts, likes, mentions, timelines, following, and multi-server and multi-identity support.

Let's talk about a few of the things I've done over the past couple of weeks and how plans have changed slightly.

"Serverless" Support

One of my stated goals when I started out was to make it easy to run on a "serverless" offering, such as AWS Lambda and Google Cloud Run. Even though ActivityPub requires a lot of background processing (message fanout, retries, identity fetching, and more), I thought that I could get away with the trick originally established by "wp-cron" back in the day - call this URL every minute and it'll do the background work then.
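To make the wp-cron idea concrete, here is a minimal sketch of what the handler behind such a URL might do: drain a task queue until either the queue is empty or a time budget runs out, so the request always finishes before the platform's timeout. The in-memory `pending_tasks` queue, the `run_pending_tasks` name and the 30-second default are all assumptions for illustration, not Takahē's actual code.

```python
import time
from collections import deque
from typing import Callable, Deque

# Hypothetical in-memory queue; a real server would keep tasks in its database.
pending_tasks: Deque[Callable[[], None]] = deque()


def run_pending_tasks(time_budget: float = 30.0) -> int:
    """Run queued tasks until the queue is empty or the time budget is spent.

    Intended to be called from the view behind the "call me every minute" URL.
    Returns the number of tasks that were run.
    """
    deadline = time.monotonic() + time_budget
    completed = 0
    while pending_tasks and time.monotonic() < deadline:
        task = pending_tasks.popleft()
        task()
        completed += 1
    return completed
```

Anything left in the queue when the budget runs out simply waits for the next call, which is exactly where the "up to a minute" delay comes from.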

This proved to be a little too much to take on immediately - I was relying on my background task system a bit too much, and it meant that your own posts wouldn't even show up in your timeline until up to a minute later.

I've got this mostly in a good place, and I think it'd work alright, but I'm also de-emphasizing it as a direct goal, as it's probably more reasonable to just make Takahē very easy to deploy - the "flagship" installation, takahe.social, runs great on a $25 virtual machine running a tiny Kubernetes cluster with a webserver and a worker pod.

Also, to be more realistic, one of Takahē's selling points is the multiple domain support, which ideally means fewer people need to run their own servers at all; thus, I want to focus more on general "this is easy to deploy and operate" rather than this specific use-case.

Besides, there's a new generation of PaaS providers, like fly.io, that can totally run containers full-time as workers, and for a very reasonable price. I'm excited to try that out.

UIs and Client APIs

One of my long-term goals for Takahē is to give it a full Mastodon-compatible client API, so the set of existing mobile apps just work with it. While that API is not particularly hard to replicate, it does have a few quirks and requires a full OAuth implementation, so I'm going to focus on that later on - and instead write a web UI as the primary interface.

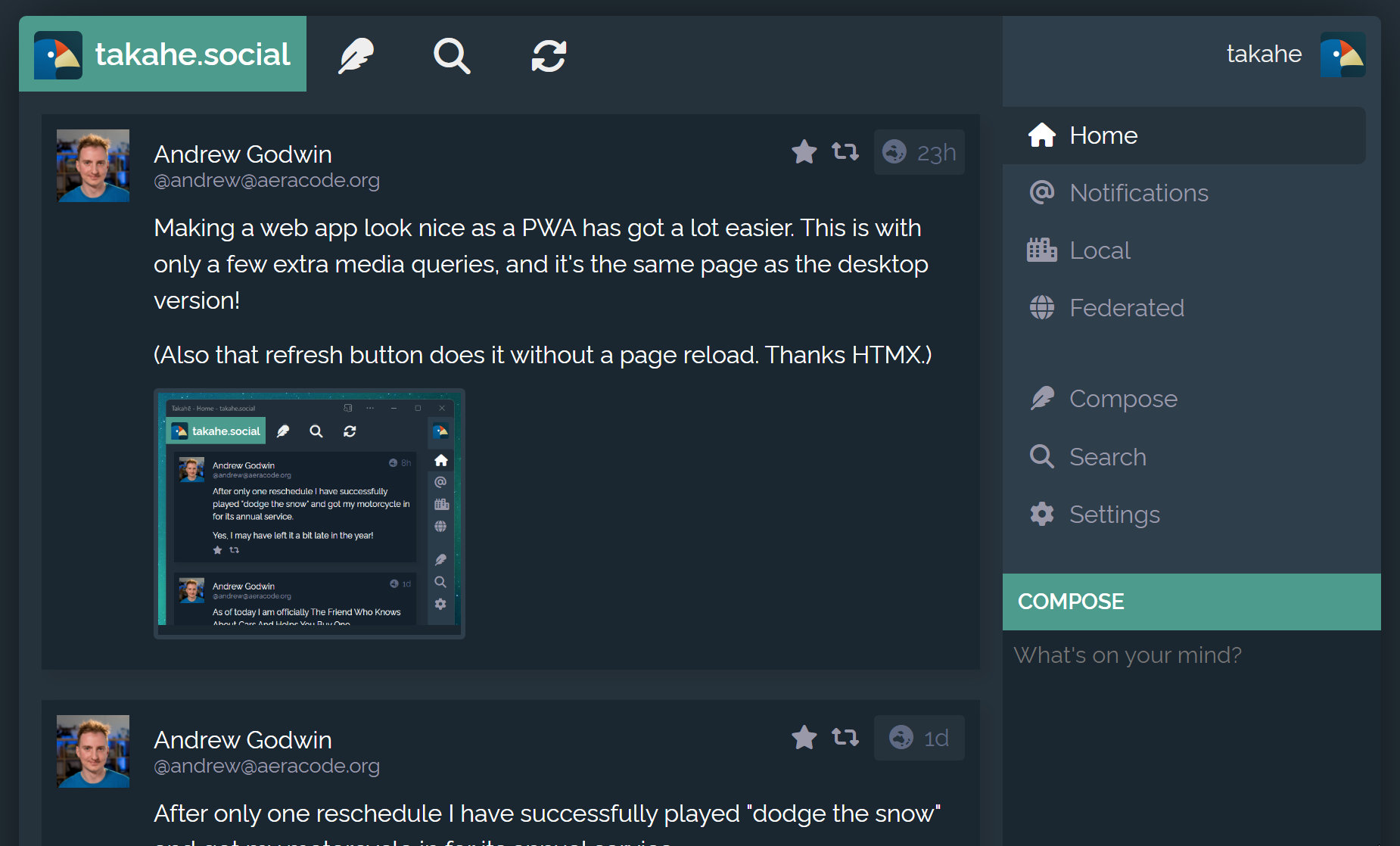

Why write a web UI at all? Well, first of all, I want something I can use on my desktop, and I generally run most things as Edge "applications" (they're PWAs in their own windows).

More importantly, though, the Mastodon Client APIs don't have support for a lot of features you need, especially on the admin and moderation side; while some of these got added in their 4.0 release, you still always need a web UI for the more advanced moderation interface, user settings, server settings, and so on.

Thus, the primary development in terms of UI these first few months will be this web UI - at least until we've hit most of the main features in terms of server support.

It has both a desktop UI (which you can see in the screenshot at the start of this post), and a mobile/PWA UI, which you can see here - they're the same webpage, just with media queries that trigger on smaller screens or if the app is running in a standalone window.

This is also what I call a "low JavaScript" interface - while there is some interactivity (for example, to allow the Boost and Like buttons to work, or to have the timeline update without a refresh), it's not required at all, and the majority of the features work with JS turned off.

The interactivity is also all using HTMX to provide a very seamless way of doing "dynamic" content while rendering it all on the server side. It's very easy for me to maintain and improve quickly given my particular set of skills, and that's pretty much the point.
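As a rough illustration of the pattern, here is a sketch of a server-rendered Like button that works both with and without JavaScript. It relies on htmx's `hx-post` and `hx-swap` attributes and on the `HX-Request: true` header that htmx adds to its requests; the URL layout and the function names are hypothetical, not the ones Takahē uses.

```python
from html import escape
from typing import Mapping, Tuple


def render_like_button(post_id: int, liked: bool) -> str:
    """Render a form that works as a plain POST, and as an htmx swap with JS on."""
    action = "unlike" if liked else "like"
    label = "Unlike" if liked else "Like"
    url = f"/posts/{post_id}/{action}/"  # hypothetical URL layout
    return (
        f'<form method="post" action="{url}" hx-post="{url}" hx-swap="outerHTML">'
        f'<button type="submit">{escape(label)}</button></form>'
    )


def like_response(headers: Mapping[str, str], post_id: int, liked: bool) -> Tuple[int, str]:
    """Return (status, body-or-location) for a like/unlike POST."""
    if headers.get("HX-Request") == "true":
        # htmx request: send back just the re-rendered button fragment.
        return 200, render_like_button(post_id, liked)
    # No JavaScript: redirect back to the post page after the POST.
    return 303, f"/posts/{post_id}/"
```

The same template fragment serves both paths, which is most of why this approach stays cheap to maintain.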

I'm not against having a fancy JavaScript interface, but that's the sort of thing one can write against the client API for any Mastodon-client-compatible server, rather than spend time on specifically for Takahē. Plus, it's not within my skillset - I've tried, and I'm just way more efficient with HTML templates and CSS than modern JavaScript tooling.

The Mysteries of Mastodon

While there is an ActivityPub/Streams spec, it's not entirely sufficient in terms of writing a server; there's a lot of stuff that happens on the side, such as the fact you need to sign GET requests to other servers if you want to just pull profile info (so they can defederate you more easily!)
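For a flavour of what signing a GET involves, here is a minimal sketch in the style of the draft "Signing HTTP Messages" scheme that Mastodon-compatible servers generally expect, covering the `(request-target)`, `host` and `date` headers. The actual RSA-SHA256 signing is left to a caller-supplied `sign` function, and the function names and key ID are assumptions for illustration rather than Takahē's real implementation.

```python
import base64
from email.utils import formatdate
from typing import Callable, Dict
from urllib.parse import urlsplit


def build_signing_string(method: str, url: str, date: str) -> str:
    """Build the newline-joined string that gets signed for a request."""
    parts = urlsplit(url)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    return "\n".join(
        [
            f"(request-target): {method.lower()} {path}",
            f"host: {parts.netloc}",
            f"date: {date}",
        ]
    )


def signed_get_headers(
    url: str, key_id: str, sign: Callable[[bytes], bytes]
) -> Dict[str, str]:
    """Return the headers for a signed GET.

    `sign` is assumed to produce an RSA-SHA256 signature with the actor's
    private key; it is supplied by the caller and not implemented here.
    """
    date = formatdate(usegmt=True)  # e.g. "Tue, 15 Nov 2022 12:00:00 GMT"
    signing_string = build_signing_string("GET", url, date)
    signature = base64.b64encode(sign(signing_string.encode("utf-8"))).decode("ascii")
    return {
        "Host": urlsplit(url).netloc,
        "Date": date,
        "Signature": (
            f'keyId="{key_id}",algorithm="rsa-sha256",'
            f'headers="(request-target) host date",signature="{signature}"'
        ),
    }
```

The receiving server fetches the public key named by `keyId`, rebuilds the same string from the request it received, and checks the signature against it.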

A large part of this process has been figuring out how all this works, and for that I just did my usual approach - write enough of a system to hook up to the rest of the network, see what messages come my way, and iterate on them.

I primarily did this by tunnelling my local Django runserver out onto the public Internet via an SSL reverse proxy running in Google Cloud Run. It makes my laptop seem like it has a real URL, and that lets other ActivityPub servers talk to it while I can still print() and debug on my local machine as things go awry.

At first, I mostly just did things against my own Mastodon server, figuring that if I really screwed up a response I had database access to fix anything weird I sent over. As I expanded, though, I started following some other accounts to make sure I got a good stream of activity - post edits, deletions, media, and more started just turning up in my server's inbox at this point.

The inbox model I have keeps messages around if there's an error processing them, so I could get a message I couldn't handle, write the code to handle it, and then the reconciliation loop would try it again and it'd work this time.
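That retry behaviour can be sketched roughly as follows: every message carries a state, failures are recorded rather than discarded, and each pass of the loop retries anything not yet processed. The `InboxMessage` class, the state names and the `reconcile` function are hypothetical stand-ins, not the real Takahē models.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class InboxMessage:
    payload: dict
    state: str = "received"  # "received", "processed", or "errored"
    error: Optional[str] = None


def reconcile(
    inbox: List[InboxMessage], handlers: Dict[str, Callable[[dict], None]]
) -> int:
    """Try every unprocessed message once; return how many succeeded this pass."""
    processed = 0
    for message in inbox:
        if message.state == "processed":
            continue
        message_type = message.payload.get("type")
        handler = handlers.get(message_type)
        try:
            if handler is None:
                raise LookupError(f"no handler for {message_type!r}")
            handler(message.payload)
        except Exception as exc:
            # Keep the message around so a later pass can retry it.
            message.state = "errored"
            message.error = str(exc)
        else:
            message.state = "processed"
            message.error = None
            processed += 1
    return processed
```

An unknown message type just sits in the "errored" state until a handler for it exists, at which point the next pass picks it up.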

There's some risk of over-indexing on Mastodon with this method - as I saw when I tried to talk to a Pleroma and a GoToSocial instance - but it's not too bad. Those mostly work now, after a few tweaks.

At this point, I have almost the full message set I need to finish developing all the main features, and I have a good idea of what further parts of my data model I'm missing as well (polls are going to need a bit of work!)

What's Next?

Obviously I am going to work down the features list and get cracking on things like "replies" and "attaching images", but I'm also going to start handing out a few invites to beta testers for the flagship instance, takahe.social, to see what we can break.

I have to say, though - aside from the weird signing stuff, and too much RDF hidden inside JSON-LD, this has been a rather decent protocol to implement so far. Once you get the basics down, things all follow a certain pattern, and implementing new things becomes quite reasonable. It bodes well for a future of multiple different small servers and big sites all talking to each other.